MySQL

MySQL was already installed on hadoop100 once, back when I deployed Hive, to store Hive's metadata. After a snapshot rollback, though, I had to install MySQL again.

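Installation

Download the RPM Bundle from the official MySQL site. It is a tar archive that contains a number of individual rpm files once extracted.

I downloaded mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar and uploaded it to /opt/software.

# Run everything as the cm user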

su cm

mkdir -p /opt/software/mysql_lib

# Extract the bundle

tar -xf /opt/software/mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar -C /opt/software/mysql_lib/

# Remove the mariadb packages that ship with the system

sudo rpm -qa | grep mariadb | xargs sudo rpm -e --nodeps

cd /opt/software/mysql_lib

# Install the rpm packages in the following order

sudo rpm -ivh mysql-community-common-5.7.28-1.el7.x86_64.rpm

sudo rpm -ivh mysql-community-libs-5.7.28-1.el7.x86_64.rpm

sudo rpm -ivh mysql-community-libs-compat-5.7.28-1.el7.x86_64.rpm

sudo rpm -ivh mysql-community-client-5.7.28-1.el7.x86_64.rpm

sudo rpm -ivh mysql-community-server-5.7.28-1.el7.x86_64.rpm

# If installing mysql-community-server fails with an error like the following

warning: 05_mysql-community-server-5.7.16-1.el7.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 5072e1f5: NOKEY

error: Failed dependencies:

libaio.so.1()(64bit) is needed by mysql-community-server-5.7.16-1.el7.x86_64

# Fix: install the missing libaio dependency, then rerun the server install

sudo yum -y install libaio
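The five `rpm -ivh` commands above have to run in a fixed order (common, libs, libs-compat, client, server). A minimal sketch of scripting that order follows. The function names `mysql_rpm_order` and `install_mysql_rpms` and the `DRY_RUN` switch are my own illustration, not part of MySQL or rpm, and the file name pattern is assumed to match the 5.7.28 el7 bundle used here.

```shell
#!/usr/bin/env bash
# Print the MySQL RPM file names in the install order used in this post.
# The "-1.el7.x86_64.rpm" suffix is an assumption taken from the bundle above.
mysql_rpm_order() {
  local version="$1"
  local pkg
  for pkg in common libs libs-compat client server; do
    echo "mysql-community-${pkg}-${version}-1.el7.x86_64.rpm"
  done
}

# Install each package in order and stop at the first failure.
# With DRY_RUN=1 (the default in this sketch) the commands are only printed.
install_mysql_rpms() {
  local version="$1"
  local rpm
  for rpm in $(mysql_rpm_order "$version"); do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "sudo rpm -ivh ${rpm}"
    else
      sudo rpm -ivh "${rpm}" || return 1
    fi
  done
}

# Usage, from inside /opt/software/mysql_lib:
#   install_mysql_rpms 5.7.28             # print the commands only
#   DRY_RUN=0 install_mysql_rpms 5.7.28   # actually install
```

Stopping at the first failure matters here because each later package depends on the earlier ones, so a missing dependency such as libaio surfaces immediately.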

# Enable the service at boot, then start it

sudo systemctl enable mysqld

sudo systemctl start mysqld

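Configuring MySQL

The main task is setting the root password.

# Find the temporary root password that MySQL generated on first start

sudo grep 'temporary password' /var/log/mysqld.log

# Log in to the MySQL CLI with the password shown above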

mysql -uroot -p'<origin_mysql_password>'
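To avoid copying the temporary password by hand, it can be pulled out of the log. This is a rough sketch that assumes the MySQL 5.7 log line ends with `root@localhost: <password>` and that the password has no spaces. `extract_temp_password` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the most recent temporary root password recorded in a mysqld log.
# Assumption: the matching line ends with "... root@localhost: <password>".
extract_temp_password() {
  local log_file="${1:-/var/log/mysqld.log}"
  # Take the last matching line and print its final whitespace-separated field.
  grep 'temporary password' "$log_file" | tail -n 1 | awk '{print $NF}'
}

# Usage (the caller must be able to read the log file):
#   mysql -uroot -p"$(extract_temp_password /var/log/mysqld.log)"
```

Taking the last match rather than the first is a deliberate choice: if the server has been reinitialized, the log may contain more than one temporary password.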

# Relax the MySQL password policy (otherwise a simple password is rejected)

mysql> set global validate_password_policy=0;

mysql> set global validate_password_length=4;

# Set a simple, easy-to-remember password

mysql> set password=password("123456");

# Switch to the mysql database

mysql> use mysql

# Query the user table

mysql> select user, host from user;

# Update the user table: set the host column to % for root so it can connect from any host

mysql> update user set host="%" where user="root";

# Reload the privilege tables

mysql> flush privileges;

# Exit

mysql> quit;

Kafka environment preparation

# Stop the Kafka cluster

kf.sh stop

# Edit /opt/module/kafka-3.0.0/bin/kafka-server-start.sh

vim /opt/module/kafka-3.0.0/bin/kafka-server-start.sh

# Old configuration: search in vim to locate this block

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

fi

# New configuration: replace the old block above with this

if [ "x$KAFKA_HEAP_OPTS" = "x" ]; then

export KAFKA_HEAP_OPTS="-server -Xms2G -Xmx2G -XX:PermSize=128m -XX:+UseG1GC -XX:MaxGCPauseMillis=200 -XX:ParallelGCThreads=8 -XX:ConcGCThreads=5 -XX:InitiatingHeapOccupancyPercent=70"

export JMX_PORT="9999"

#export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

fi

# After the change, distribute the file to the other nodes before starting Kafka

xsync /opt/module/kafka-3.0.0/bin/kafka-server-start.sh
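The `if [ "x$KAFKA_HEAP_OPTS" = "x" ]` guard edited above only applies its body when `KAFKA_HEAP_OPTS` is empty or unset. The sketch below mirrors that guard in isolation to show the behavior. `DEFAULT_HEAP` and `resolve_heap_opts` are hypothetical names, and the shortened heap string is only for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for the value set inside the guard.
DEFAULT_HEAP="-Xms2G -Xmx2G"

# Same test as in kafka-server-start.sh: the "x" prefix makes the comparison
# safe when the variable is empty or unset.
resolve_heap_opts() {
  if [ "x${KAFKA_HEAP_OPTS:-}" = "x" ]; then
    echo "$DEFAULT_HEAP"
  else
    echo "$KAFKA_HEAP_OPTS"
  fi
}

# Usage:
#   unset KAFKA_HEAP_OPTS; resolve_heap_opts            # falls back to DEFAULT_HEAP
#   KAFKA_HEAP_OPTS="-Xmx512M" resolve_heap_opts        # keeps the caller's value
```

One consequence of the edited script: `export JMX_PORT="9999"` sits inside the same `if`, so it is skipped whenever `KAFKA_HEAP_OPTS` is already exported in the shell that starts the broker.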

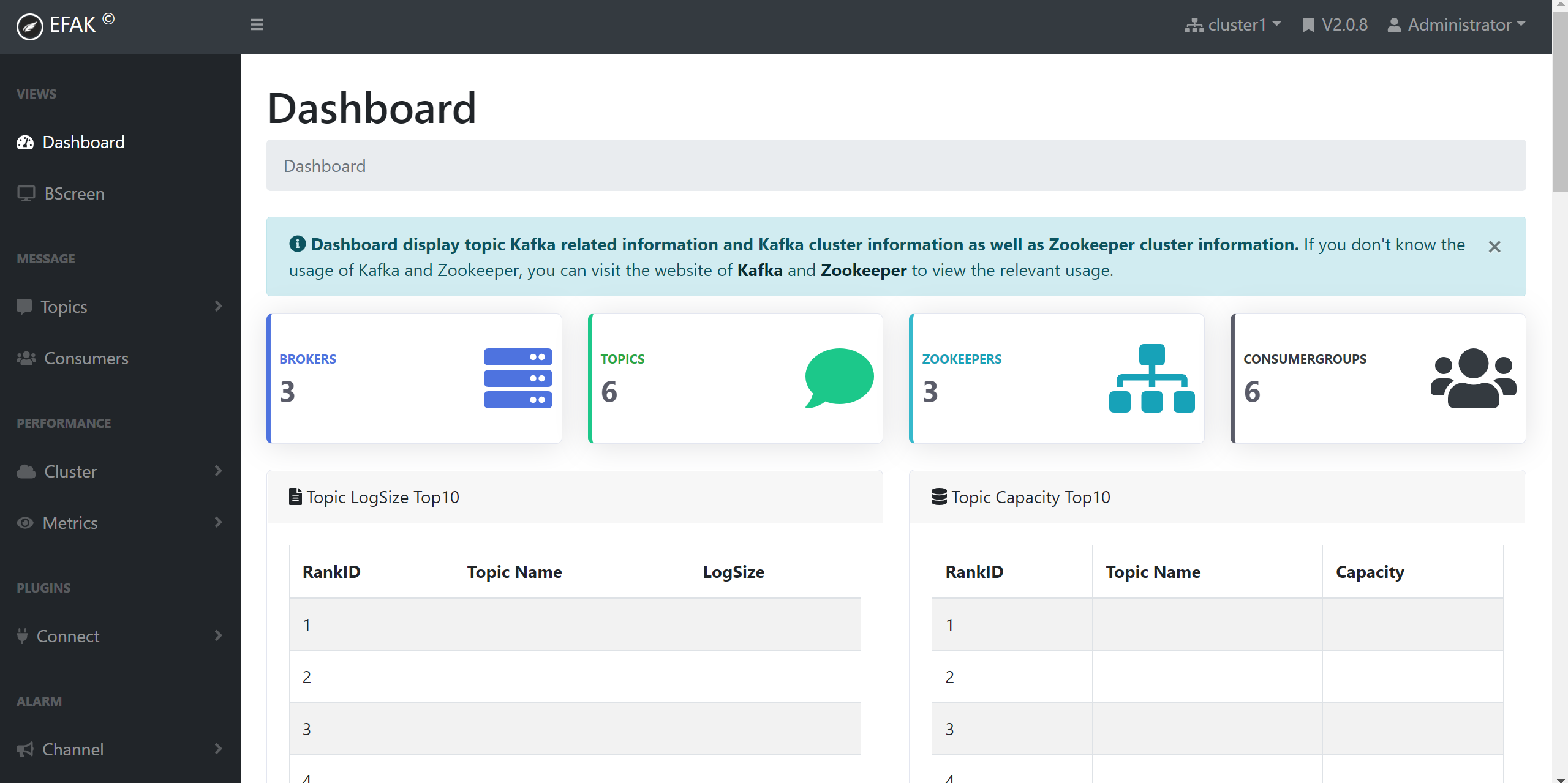

Installing Kafka-Eagle

Download the package from the official site and upload it to the /opt/software directory on hadoop100.

cd /opt/software

tar -zxvf kafka-eagle-bin-2.0.8.tar.gz

cd kafka-eagle-bin-2.0.8

tar -zxvf efak-web-2.0.8-bin.tar.gz -C /opt/module/

cd /opt/module

mv efak-web-2.0.8 efak-2.0.8

Configure environment variables

sudo vim /etc/profile

# Add the following lines

# KAFKA_EFAK_HOME

export KE_HOME=/opt/module/efak-2.0.8

export PATH=$PATH:$KE_HOME/bin

source /etc/profile
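If the profile is edited more than once, the same `export` lines can pile up. A small sketch of an idempotent append follows. `append_once` is a hypothetical helper, and writing to /etc/profile itself needs root, so this is shown against an arbitrary file path.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
append_once() {
  local file="$1"
  local line="$2"
  # -x: match the whole line, -F: treat the pattern as a fixed string.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage (as a user with write access to the target file):
#   append_once /etc/profile 'export KE_HOME=/opt/module/efak-2.0.8'
#   append_once /etc/profile 'export PATH=$PATH:$KE_HOME/bin'
```

The single quotes in the usage lines keep `$PATH` and `$KE_HOME` unexpanded, so the profile receives them literally, as in the manual edit.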

Edit the configuration file

vim /opt/module/efak-2.0.8/conf/system-config.properties

# The complete configuration is as follows

######################################

# multi zookeeper & kafka cluster list

# Settings prefixed with 'kafka.eagle.' will be deprecated, use 'efak.' instead

######################################

# Alias for the ZooKeeper cluster

efak.zk.cluster.alias=cluster1

# Connection string for the ZooKeeper cluster

cluster1.zk.list=hadoop100:2181,hadoop101:2181,hadoop102:2181/kafka

######################################

# zookeeper enable acl

######################################

cluster1.zk.acl.enable=false

cluster1.zk.acl.schema=digest

cluster1.zk.acl.username=test

cluster1.zk.acl.password=test123

######################################

# broker size online list

######################################

cluster1.efak.broker.size=20

######################################

# zk client thread limit

######################################

kafka.zk.limit.size=32

######################################

# EFAK webui port

######################################

efak.webui.port=8048

######################################

# kafka jmx acl and ssl authenticate

######################################

cluster1.efak.jmx.acl=false

cluster1.efak.jmx.user=keadmin

cluster1.efak.jmx.password=keadmin123

cluster1.efak.jmx.ssl=false

cluster1.efak.jmx.truststore.location=/data/ssl/certificates/kafka.truststore

cluster1.efak.jmx.truststore.password=ke123456

######################################

# kafka offset storage

######################################

# Offsets are stored in Kafka

cluster1.efak.offset.storage=kafka

######################################

# kafka jmx uri

######################################

cluster1.efak.jmx.uri=service:jmx:rmi:///jndi/rmi://%s/jmxrmi

######################################

# kafka metrics, 15 days by default

######################################

efak.metrics.charts=true

efak.metrics.retain=15

######################################

# kafka sql topic records max

######################################

efak.sql.topic.records.max=5000

efak.sql.topic.preview.records.max=10

######################################

# delete kafka topic token

######################################

efak.topic.token=keadmin

######################################

# kafka sasl authenticate

######################################

cluster1.efak.sasl.enable=false

cluster1.efak.sasl.protocol=SASL_PLAINTEXT

cluster1.efak.sasl.mechanism=SCRAM-SHA-256

cluster1.efak.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="kafka" password="kafka-eagle";

cluster1.efak.sasl.client.id=

cluster1.efak.blacklist.topics=

cluster1.efak.sasl.cgroup.enable=false

cluster1.efak.sasl.cgroup.topics=

cluster2.efak.sasl.enable=false

cluster2.efak.sasl.protocol=SASL_PLAINTEXT

cluster2.efak.sasl.mechanism=PLAIN

cluster2.efak.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="kafka-eagle";

cluster2.efak.sasl.client.id=

cluster2.efak.blacklist.topics=

cluster2.efak.sasl.cgroup.enable=false

cluster2.efak.sasl.cgroup.topics=

######################################

# kafka ssl authenticate

######################################

cluster3.efak.ssl.enable=false

cluster3.efak.ssl.protocol=SSL

cluster3.efak.ssl.truststore.location=

cluster3.efak.ssl.truststore.password=

cluster3.efak.ssl.keystore.location=

cluster3.efak.ssl.keystore.password=

cluster3.efak.ssl.key.password=

cluster3.efak.ssl.endpoint.identification.algorithm=https

cluster3.efak.blacklist.topics=

cluster3.efak.ssl.cgroup.enable=false

cluster3.efak.ssl.cgroup.topics=

######################################

# kafka sqlite jdbc driver address

######################################

# MySQL connection settings

efak.driver=com.mysql.jdbc.Driver

efak.url=jdbc:mysql://hadoop100:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

# Database username

efak.username=root

# Database password

efak.password=123456

######################################

# kafka mysql jdbc driver address

######################################

#efak.driver=com.mysql.cj.jdbc.Driver

#efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

#efak.username=root

#efak.password=123456
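With a file this long, it is easy to leave a key commented out or mistyped. As a quick sanity check, a sketch like the one below reads a single key back out of the properties file. `get_prop` is a hypothetical helper, and it only handles plain `key=value` lines (no line continuations or `:` separators).

```shell
#!/usr/bin/env bash
# Print the value of a key from a simple key=value properties file.
# Comment lines are skipped; if a key appears twice, the last one wins.
# Returns non-zero when the key is not set.
get_prop() {
  local file="$1"
  local key="$2"
  awk -F= -v k="$key" '
    /^[[:space:]]*#/ { next }
    $1 == k { sub(/^[^=]*=/, ""); val = $0; found = 1 }
    END { if (found) print val; else exit 1 }
  ' "$file"
}

# Usage:
#   conf=/opt/module/efak-2.0.8/conf/system-config.properties
#   get_prop "$conf" efak.zk.cluster.alias
#   get_prop "$conf" cluster1.zk.list
#   get_prop "$conf" efak.url
```

Only the text before the first `=` is treated as the key, so values that contain `=` themselves, such as the JDBC URL, come back intact.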

Startup

Note: ZooKeeper and Kafka must be running before EFAK is started.

zk.sh start

kf.sh start

Start EFAK

ke.sh start

Stop EFAK

ke.sh stop
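After `ke.sh start`, the web UI may take a little while to come up. A minimal retry loop for probing it is sketched below. `wait_for` is a hypothetical helper, and the URL in the usage line assumes EFAK runs on hadoop100 with `efak.webui.port=8048` as configured above.

```shell
#!/usr/bin/env bash
# Retry a probe command until it succeeds or the attempts run out.
# Arguments: <tries> <delay-seconds> <command> [args...]
wait_for() {
  local tries="$1"
  local delay="$2"
  shift 2
  local i=1
  while [ "$i" -le "$tries" ]; do
    "$@" && return 0
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage: probe the EFAK web UI up to 30 times, 2 seconds apart.
#   wait_for 30 2 curl -sf -o /dev/null http://hadoop100:8048/ && echo "EFAK is up"
```

Passing the probe as a command keeps the loop generic, so the same helper can wait on ZooKeeper or a Kafka broker port with a different probe.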

Kafka-Eagle web UI

Log in to the web page to view the monitoring data.

- Username: admin

- Password: 123456
